What Just Happened

Anthropic released Claude Sonnet 4.6, the newest generation of its production-focused model tier, with significant improvements across agentic workflows, coding tasks, reasoning benchmarks, and real-world computer-use environments. The release continues Anthropic’s strategy of compressing flagship-level capability into its most deployable model, making advanced reasoning and automation far more accessible for everyday engineering workloads.

Anthropic CEO - Dario Amodei

ARTIFICIAL INTELLIGENCE

🌎 Let’s Talk Benchmarks

Claude Sonnet 4.6 Benchmarks

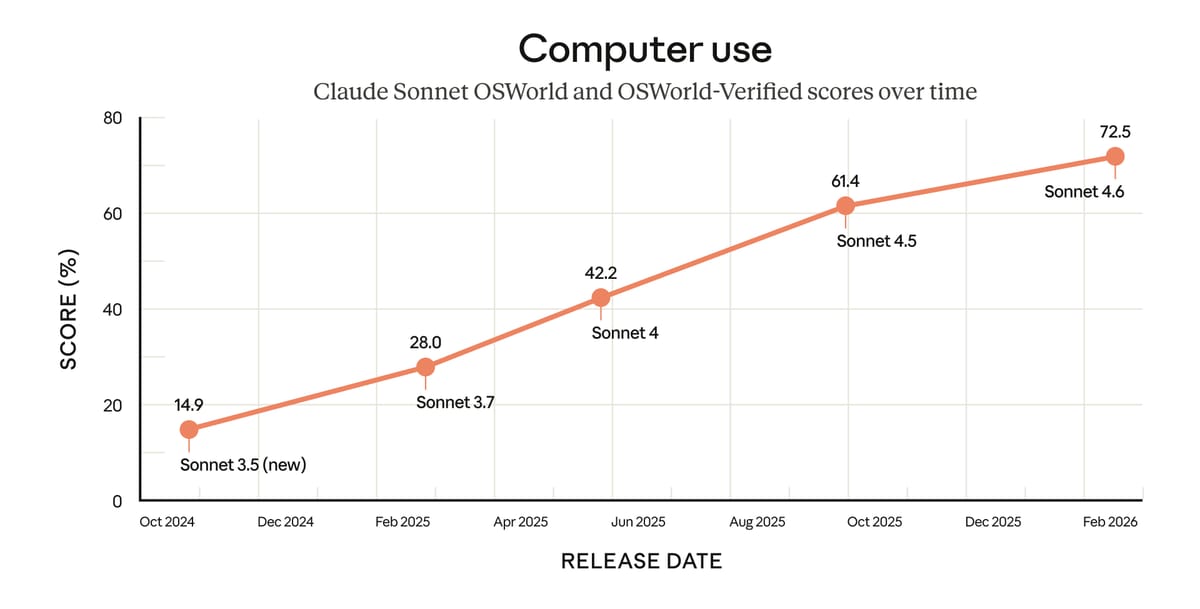

Early benchmark disclosures show a consistent pattern across the areas that matter most for real-world deployment. Sonnet 4.6 reaches 59.1% on agentic terminal coding, 72.5% on OSWorld-Verified computer-use tasks, roughly 91.7% on tool-use reliability in structured workflow environments, and about 89.9% on graduate-level reasoning (GPQA Diamond). It also posts leading results on office and automation-style productivity benchmarks, signaling strong performance on the kinds of multi-step tasks organizations actually want AI systems to handle.

Taken together, these results point to a broader shift in model optimization priorities. Instead of focusing primarily on conversational intelligence, Sonnet 4.6 is clearly tuned for workflow execution — writing code inside real environments, interacting with software tools, retrieving the right context, and completing structured tasks end-to-end. The trajectory suggests that production-tier models are increasingly being designed not just to provide answers, but to reliably function as operational agents inside real digital systems.

What’s The HYPE?

Computer-Use Performance Momentum

Computer-Use Benchmarks via Anthropic

One of the most important strategic signals in this release is how quickly computer-use capability is improving. Over just a few Sonnet generations, the model has moved from handling tightly scoped instructions to increasingly navigating real software interfaces, operating system workflows, and multi-step tasks that resemble how people actually work on a computer. This matters more than a single benchmark gain because it represents a structural shift: models are evolving from systems that primarily respond to prompts into systems that can take actions across tools and environments.

In practice, computer-use reliability is what determines whether AI automation works outside of demos. Many organizations have found that reasoning quality was already strong, but workflows failed when models needed to interact with real applications, data connectors, or complex task sequences. The steady improvement suggests agent-based systems are moving closer to production-grade reliability, shifting the competitive frontier from “which model is smartest” to “which model can consistently execute real work inside digital systems.”
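A quick way to see why per-step reliability matters so much for long task sequences: under a simplifying assumption (ours, not Anthropic’s) that each of the n steps in a workflow succeeds independently with probability p, the whole task succeeds with probability p^n. The numbers below are purely illustrative arithmetic, not benchmark results.

$$
P(\text{task succeeds}) = p^{n}, \qquad 0.95^{20} \approx 0.36, \qquad 0.99^{20} \approx 0.82
$$

Under this toy model, moving from 95% to 99% per-step reliability more than doubles the chance of finishing a 20-step workflow, which is why modest per-step gains can matter more than a headline benchmark number.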


AI Assistant

Real-World Workflow Example

MCP Connectors Within Claude: Pull In Context From Outside The Spreadsheet!

The model’s expanded tool use and connector integrations, including MCP (Model Context Protocol) connectors, let agents operate directly across real business systems. Instead of working from static inputs, agents can analyze live spreadsheet data, connect to enterprise sources, perform multi-step operational or financial analyses, and generate reports that combine context from multiple tools, enabling workflows that previously required manual coordination.
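To make the mechanics concrete, here is a minimal sketch of the client-side half of a tool-use loop. It assumes the block shapes used by Anthropic’s Messages API for tool use (the model returns `tool_use` blocks, and the client answers with `tool_result` blocks in the next user message). The `get_sheet_range` tool, its in-memory data, and the `toolu_01` ID are hypothetical, and no API call is made. MCP connectors route this plumbing through MCP servers instead, so treat this as an illustration of the general pattern, not how Claude’s connectors are implemented.

```python
import json
from typing import Any, Callable

# Hypothetical tool definition in the Messages API "tools" format:
# this dict is what you would pass in the `tools` list of a request.
SPREADSHEET_TOOL = {
    "name": "get_sheet_range",
    "description": "Return cell values from a range in a named sheet.",
    "input_schema": {
        "type": "object",
        "properties": {
            "sheet": {"type": "string"},
            "a1_range": {"type": "string"},
        },
        "required": ["sheet", "a1_range"],
    },
}

# Hypothetical stand-in data; a real connector would query a live system.
FAKE_SHEETS = {("Q3", "B2:B4"): [1200, 1350, 980]}


def get_sheet_range(sheet: str, a1_range: str) -> list[int]:
    """Look up cell values for a (sheet, range) pair in the fake store."""
    return FAKE_SHEETS[(sheet, a1_range)]


# Map tool names the model may request to local Python handlers.
HANDLERS: dict[str, Callable[..., Any]] = {"get_sheet_range": get_sheet_range}


def run_tool_calls(content: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Execute each tool_use block and return matching tool_result blocks."""
    results = []
    for block in content:
        if block.get("type") != "tool_use":
            continue  # skip text blocks and anything else
        handler = HANDLERS.get(block["name"])
        if handler is None:
            # Report unknown tools back to the model instead of crashing.
            results.append({
                "type": "tool_result",
                "tool_use_id": block["id"],
                "content": f"Unknown tool: {block['name']}",
                "is_error": True,
            })
            continue
        output = handler(**block["input"])
        results.append({
            "type": "tool_result",
            "tool_use_id": block["id"],
            "content": json.dumps(output),
        })
    return results


# Simulated assistant turn containing one tool request.
assistant_content = [
    {"type": "text", "text": "Let me pull the Q3 figures."},
    {"type": "tool_use", "id": "toolu_01", "name": "get_sheet_range",
     "input": {"sheet": "Q3", "a1_range": "B2:B4"}},
]
reply = {"role": "user", "content": run_tool_calls(assistant_content)}
print(reply["content"][0]["content"])  # [1200, 1350, 980]
```

In a real agent, `reply` would be appended to the conversation and sent back to the model, and the loop would repeat until the model stops requesting tools. Keeping handlers in a name-to-function map makes it easy to add further connectors without touching the dispatch logic.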

More broadly, this reflects the continued shift from standalone chat experiences toward workflow-native AI systems, where models are embedded inside everyday software environments and help execute real operational tasks end-to-end rather than simply generating responses.

Why This Matters

The competitive frontier is now shifting toward agent reliability, tool orchestration, and long-horizon reasoning, rather than single-prompt intelligence. Sonnet 4.6 strengthens Anthropic’s position in the enterprise deployment tier by delivering near-flagship reasoning performance while maintaining the cost-efficiency and responsiveness required for production systems.

Bottom Line

Claude Sonnet 4.6 reinforces an important market trend: the most valuable AI models are no longer just the smartest — they are the ones that can reliably execute workflows across tools, environments, and extended reasoning chains at scale.