⚡ What’s DeepSeek-OCR?

DeepSeek-AI just released an open-source model, DeepSeek-OCR, that takes text rendered as an image, squeezes it into a small set of vision tokens, and then decodes it back into text.

They describe the idea as compressing context through optical 2D mapping, and the numbers are wild:

🧩 Up to 10× compression (text tokens vs. vision tokens) with ≈ 97 % decoding accuracy

⚙️ Processes 200 000 pages per GPU per day

💻 Fully open source on GitHub + Hugging Face

🧠 Built to smash LLM context window limits & cut token costs
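To put the throughput number in perspective, here is a quick back-of-the-envelope sketch in Python. It only uses the 200 000 pages per GPU per day figure quoted above; the function names and the one-million-page corpus are hypothetical, picked for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86 400


def pages_per_second(pages_per_gpu_per_day: float) -> float:
    """Convert a daily per-GPU page count into pages per second."""
    return pages_per_gpu_per_day / SECONDS_PER_DAY


def gpu_days_needed(total_pages: float, pages_per_gpu_per_day: float) -> float:
    """GPU-days required to process a corpus at the given daily rate."""
    return total_pages / pages_per_gpu_per_day


if __name__ == "__main__":
    rate = 200_000  # pages per GPU per day, the figure quoted in the article
    print(f"{pages_per_second(rate):.2f} pages per second per GPU")
    # Hypothetical corpus size, for illustration only.
    print(f"{gpu_days_needed(1_000_000, rate):.1f} GPU-days for 1,000,000 pages")
```

That works out to a bit more than two pages per second on one GPU, assuming the quoted rate holds for your documents.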

📊 How It Performs

DeepSeek-OCR posts top-tier edit-distance scores on benchmarks (lower is better) while using fewer vision tokens per image than models built on Qwen and InternVL encoders.
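If edit distance is new to you: it counts how many single-character insertions, deletions, or substitutions separate the model's output from the ground truth. Below is a minimal Python sketch of a normalized version. Dividing by the longer string's length is an assumption made here for illustration; the benchmark's exact normalization may differ, and the function names are hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character edits that turn `a` into `b`."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(
                min(
                    previous[j] + 1,         # delete ch_a
                    current[j - 1] + 1,      # insert ch_b
                    previous[j - 1] + cost,  # substitute (or match)
                )
            )
        previous = current
    return previous[-1]


def normalized_edit_distance(predicted: str, reference: str) -> float:
    """Edit distance scaled to [0, 1]; 0.0 means a perfect transcription.

    Normalizing by the longer string is an assumption for this sketch.
    """
    longest = max(len(predicted), len(reference))
    if longest == 0:
        return 0.0
    return levenshtein(predicted, reference) / longest


if __name__ == "__main__":
    print(normalized_edit_distance("E = mc^2", "E = mc^2"))
    print(normalized_edit_distance("E = mc2", "E = mc^2"))
```

A score of 0.0 means the OCR output matches the reference exactly, and higher values mean more characters had to be fixed.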

The chart plots accuracy against vision tokens per image. DeepSeek-OCR (red dots) sits ahead of the other encoders on that trade-off, meaning it's both more accurate and lighter than the models it's compared with.

🧩 Real-World Test

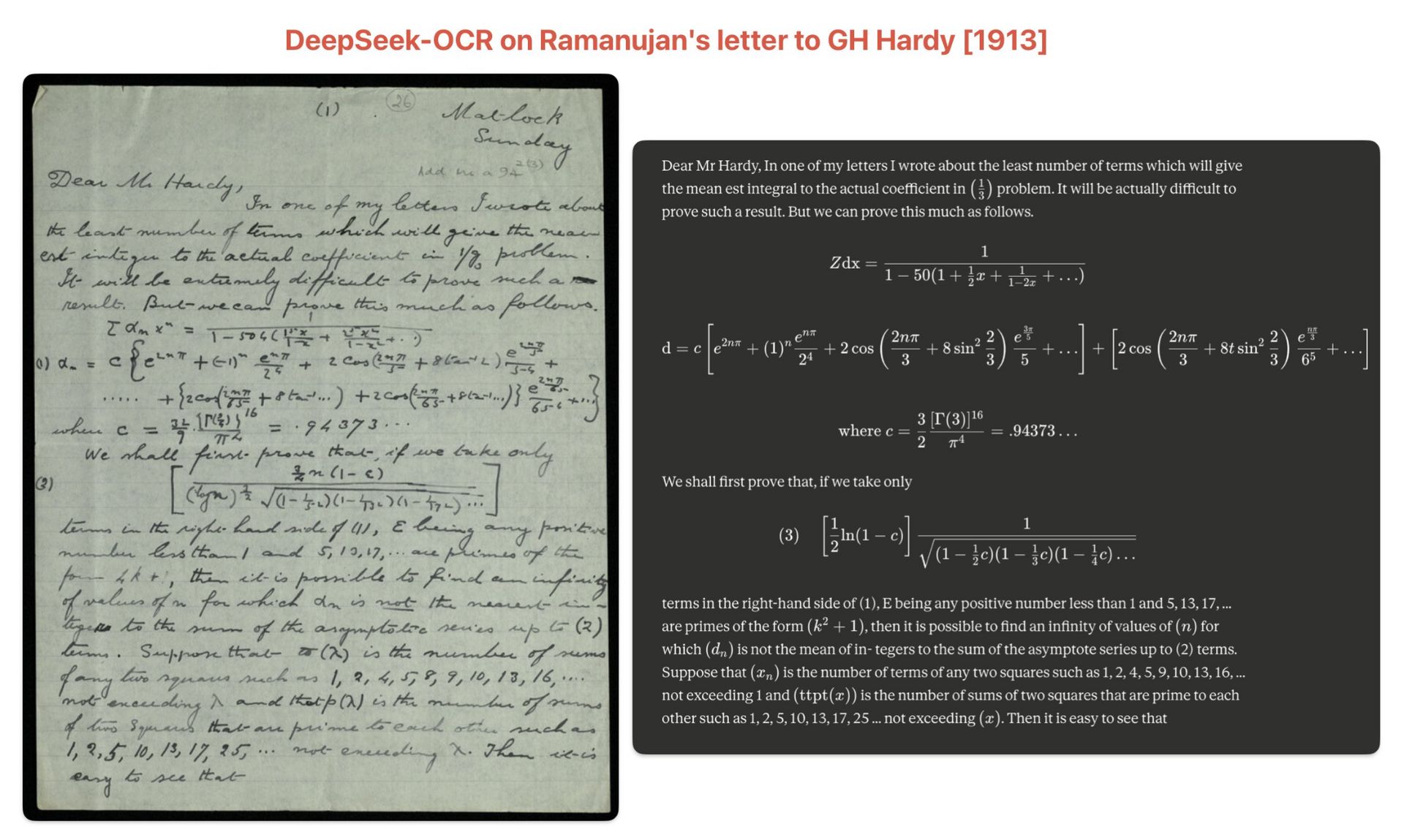

DeepSeek-OCR reconstructs Ramanujan’s 1913 handwritten letter to G. H. Hardy, turning century-old math notes into cleanly decoded LaTeX-style text and formulas.

That example alone shows how well this model handles complex layouts, handwriting, and scientific notation — something traditional OCR systems still struggle with.

🧠 Under the Hood

DeepSeek-OCR uses a two-part system:

A Vision Encoder (DeepEncoder) that maps an image of the text → a compact set of vision tokens

A Text Decoder (DeepSeek3B-MoE) that reconstructs the text from those vision tokens with high fidelity

It holds up best below 10× compression, where decoding accuracy stays around 97 %. Past that, accuracy gradually drops (to ~60 % at 20×).

Still, the breakthrough is massive: in principle, you can fit roughly 10× more text into the same token budget without paying 10× the cost.
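The compression ratio here is simply text tokens divided by vision tokens. Here is a small Python sketch of that arithmetic, assuming you already know both counts for a page. The helper names and the example token counts are hypothetical and not part of DeepSeek-OCR's code.

```python
import math


def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """Text tokens represented per vision token."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_tokens / vision_tokens


def vision_token_budget(text_tokens: int, max_ratio: float) -> int:
    """Fewest vision tokens that keep the ratio at or below `max_ratio`."""
    if max_ratio <= 0:
        raise ValueError("max_ratio must be positive")
    return math.ceil(text_tokens / max_ratio)


if __name__ == "__main__":
    # Hypothetical page: 1,000 text tokens encoded as 100 vision tokens.
    print(compression_ratio(1_000, 100))
    # Vision tokens needed to stay at or under the 10x mark cited above.
    print(vision_token_budget(1_000, 10))
```

Going by the figures in this article, staying at or under a ratio of 10 is where accuracy sits near 97 %, and pushing toward 20 is where it falls to around 60 %.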

💡 Why It Matters

Imagine feeding an entire research paper, PDF, or book into an LLM without hitting token limits.

DeepSeek-OCR points the way there by compressing text into vision tokens: at 10× compression, token usage drops by up to 90 %.
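Where does 90 % come from? Compressing by a factor of r leaves 1/r of the tokens, so the saving is 1 - 1/r. Here is a tiny Python sketch of that formula. The function names and the 100 000-token document are hypothetical examples, not measurements.

```python
def token_savings(ratio: float) -> float:
    """Fraction of tokens saved at a given compression ratio: 1 - 1/ratio."""
    if ratio < 1:
        raise ValueError("ratio must be at least 1")
    return 1.0 - 1.0 / ratio


def tokens_after_compression(text_tokens: int, ratio: float) -> float:
    """Tokens left after compressing `text_tokens` by `ratio`."""
    return text_tokens * (1.0 - token_savings(ratio))


if __name__ == "__main__":
    print(f"{token_savings(10):.0%} saved at 10x")
    # Hypothetical 100,000-token document, for illustration only.
    print(f"{tokens_after_compression(100_000, 10):,.0f} tokens remain")
```

Note that the saving only counts if the decoded text is still accurate enough for your use case, which is why the 10× mark matters.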

It’s basically a (slightly lossy) ZIP file for LLM context.

What this means:

📚 Load massive documents without truncation

💰 Cut prompt & compute costs dramatically

⚡ Enable long-context agents that can keep far more history in view

The Bottom Line

DeepSeek just redefined how we think about context.

Turning text into visual data isn’t just an OCR upgrade — it’s a new layer of intelligence for LLMs.

The teams that adopt this early will handle longer contexts, faster and cheaper than everyone else.

The question isn’t if AI will compress the world — it’s whether you’ll be the one building with it.