🧠 What Is “Agentic Vision”?

Today, Google quietly upgraded Gemini 3 Flash with what it calls Agentic Vision — and this isn’t just “better image responses.”

Instead of a model trying to eyeball an image once and hoping it’s right, Gemini 3 Flash now treats vision as an active investigation: it plans, writes and runs code, and refines its understanding step by step.

This changes what multimodal AI can do in practice: image understanding is no longer a single static pass but an agentic, multi-step process.

The key points:

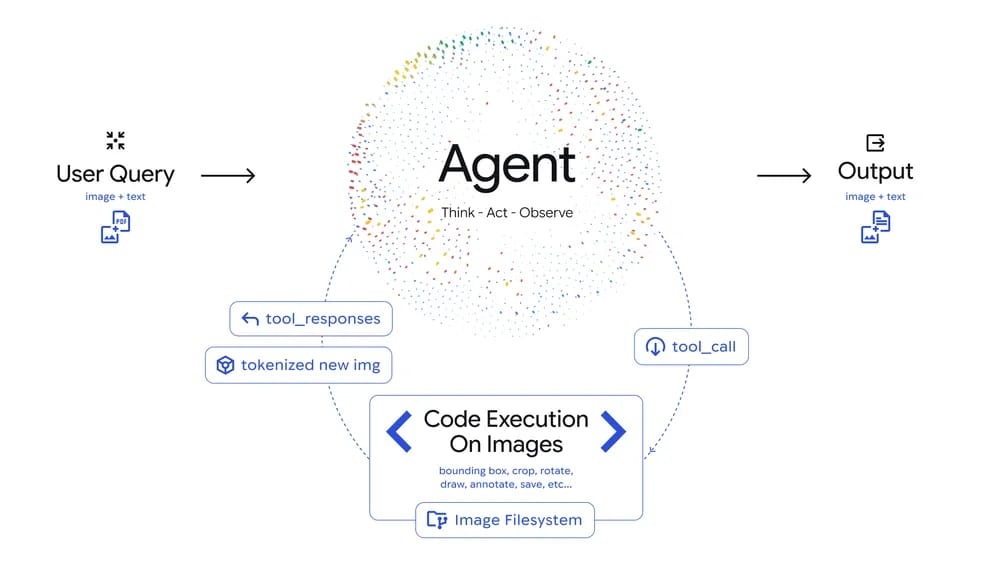

Active image reasoning: Gemini 3 Flash now runs a “Think → Act → Observe” loop when processing visuals.

Code execution integrated: The model can generate Python code to zoom in, crop, annotate, and compute with images instead of guessing.

Grounded answers: Each manipulated image is fed back into the model's context before it produces the final output.

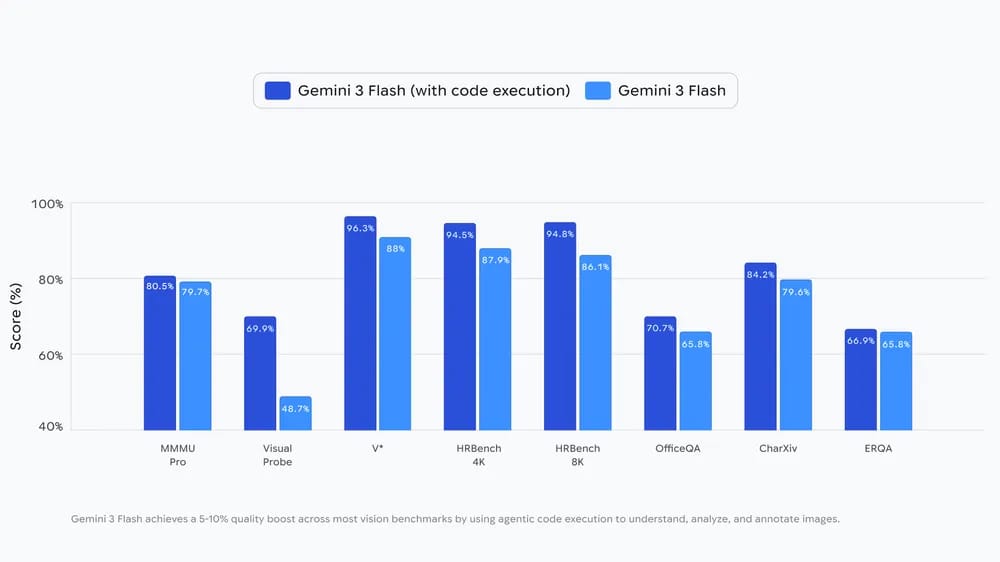

Quality boost: Early results show a consistent 5–10% improvement on vision benchmarks when Gemini 3 Flash runs with code execution, compared with the same model processing the image in a single static pass.

This is not just “vision + chat.” It’s vision + reasoning + action.
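
To make "zoom in and crop" concrete, here is a minimal sketch of the kind of Python a model might write during the Act step. It is an illustration only: the image is a hypothetical nested list of grayscale values, and the `crop` and `zoom` helpers are names invented for this example, not code that Gemini is known to generate.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels, row-major (assumed representation)


def crop(image: Image, left: int, top: int, right: int, bottom: int) -> Image:
    """Return rows top..bottom-1 and columns left..right-1 of the image."""
    return [row[left:right] for row in image[top:bottom]]


def zoom(image: Image, factor: int) -> Image:
    """Upscale by an integer factor using nearest-neighbour pixel repetition."""
    zoomed: Image = []
    for row in image:
        # Repeat every pixel horizontally, then repeat the widened row vertically.
        wide_row = [pixel for pixel in row for _ in range(factor)]
        zoomed.extend(list(wide_row) for _ in range(factor))
    return zoomed


# Hypothetical 4x4 image with a small detail in the lower-right corner.
image = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 9, 7],
    [0, 0, 5, 9],
]

detail = crop(image, left=2, top=2, right=4, bottom=4)
enlarged = zoom(detail, factor=2)

for row in enlarged:
    print(row)
```

The point is the workflow rather than the helpers: the enlarged crop is what would be handed back to the model, so the answer rests on the inspected region and not on a first glance at the full image.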

🛠 What This Actually Enables

With Agentic Vision, developers can:

Zoom and inspect fine details (e.g., serial numbers on microchips)

Annotate and draw on images (bounding boxes, labels, and counts)

Use visual data in calculations (generate charts and numerically grounded insights)

Integrate with tools and workflows via code execution

Instead of returning a guess like “looks like 42,” Gemini 3 Flash can now verify that number by cropping the image, labeling elements, and counting them precisely.
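
As a rough sketch of "count instead of guess," the snippet below labels connected regions in a tiny binary mask and returns one bounding box per object. Everything here is an assumption made for illustration: the mask is hypothetical, `find_objects` is an invented helper, and a real pipeline would first have to segment the image, which this sketch skips.

```python
from collections import deque
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom), inclusive


def find_objects(mask: List[List[int]]) -> List[Box]:
    """Label 4-connected regions of 1s and return one bounding box per region."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    boxes: List[Box] = []
    for y in range(height):
        for x in range(width):
            if mask[y][x] != 1 or seen[y][x]:
                continue
            # Breadth-first flood fill from this unvisited foreground pixel.
            queue = deque([(x, y)])
            seen[y][x] = True
            left, top, right, bottom = x, y, x, y
            while queue:
                cx, cy = queue.popleft()
                left, right = min(left, cx), max(right, cx)
                top, bottom = min(top, cy), max(bottom, cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    inside = 0 <= nx < width and 0 <= ny < height
                    if inside and mask[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append((left, top, right, bottom))
    return boxes


# Hypothetical mask: 1 marks pixels that belong to an object.
mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 1, 0],
    [0, 0, 0, 1, 0],
    [1, 0, 0, 0, 0],
]

boxes = find_objects(mask)
count = len(boxes)
print(count, boxes)
```

Each bounding box doubles as an annotation, so the count can be checked against the labeled image instead of being taken on trust.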

🧩 Why This Matters

[Figure: benchmark comparison, Gemini 3 Flash vs. Gemini 3 Flash with code execution]

Vision has long been a weak spot for language models: single static passes, fuzzy detail recognition, and hallucinated visual responses. Agentic Vision addresses that by adding tool-backed visual reasoning, which is a big step toward trustworthy multimodal AI.

This matters in real use cases such as:

Document analysis (tables, charts, receipts)

Engineering inspection (plans, schematics, quality control)

Medical imaging contexts

Data extraction from visuals

Visual automation with agents

Suddenly, AI isn’t just describing what it sees. It’s acting on what it sees.

⚙️ How It Works

Agentic Vision introduces an agentic loop:

Think: Analyze the image and plan steps.

Act: Write and run Python code to manipulate/interpret visuals.

Observe: Feed results back into context for final answers.

This is far closer to visual problem solving than passive vision classification.
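
The loop above can be sketched in a few lines. This is a toy under stated assumptions, not Gemini's actual implementation: `think` is a hard-coded stand-in for the model's planning, `TOOLS` is a hypothetical registry standing in for generated Python, and the "image" is just a flat list of pixel values.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical tools standing in for code the model would write and run.
TOOLS: Dict[str, Callable[[List[int]], int]] = {
    "count_nonzero": lambda pixels: sum(1 for p in pixels if p != 0),
    "max_value": lambda pixels: max(pixels),
}


def think(question: str, context: List[str]) -> Optional[str]:
    """Stand-in planner: choose the next tool, or None when nothing is left to do."""
    already_counted = any(entry.startswith("count_nonzero") for entry in context)
    if "how many" in question.lower() and not already_counted:
        return "count_nonzero"
    return None


def run_loop(question: str, pixels: List[int], max_steps: int = 5) -> List[str]:
    """Run Think -> Act -> Observe until the planner stops or max_steps is hit."""
    context: List[str] = []
    for _ in range(max_steps):
        tool_name = think(question, context)        # Think: plan the next step
        if tool_name is None:
            break
        result = TOOLS[tool_name](pixels)           # Act: execute the chosen code
        context.append(f"{tool_name} -> {result}")  # Observe: feed the result back
    return context


observations = run_loop("How many bright pixels are there?", [0, 3, 0, 7, 1])
print(observations)
```

The design choice worth noticing is that every observation lands in `context` before the next Think step, which is what lets later steps build on measured results rather than on the first impression.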

🧠 Bottom Line

Agentic Vision upgrades Gemini 3 Flash from “static vision” to active visual reasoning. For developers and teams building real-world tools, this means less guesswork, more grounded outputs, and a new class of image-enabled workflows.

This looks like a small feature change on the surface, but it is really a glimpse of how agentic AI will interact with the visual world: not as an observer, but as an investigator.

And that’s a big deal.