What just happened:

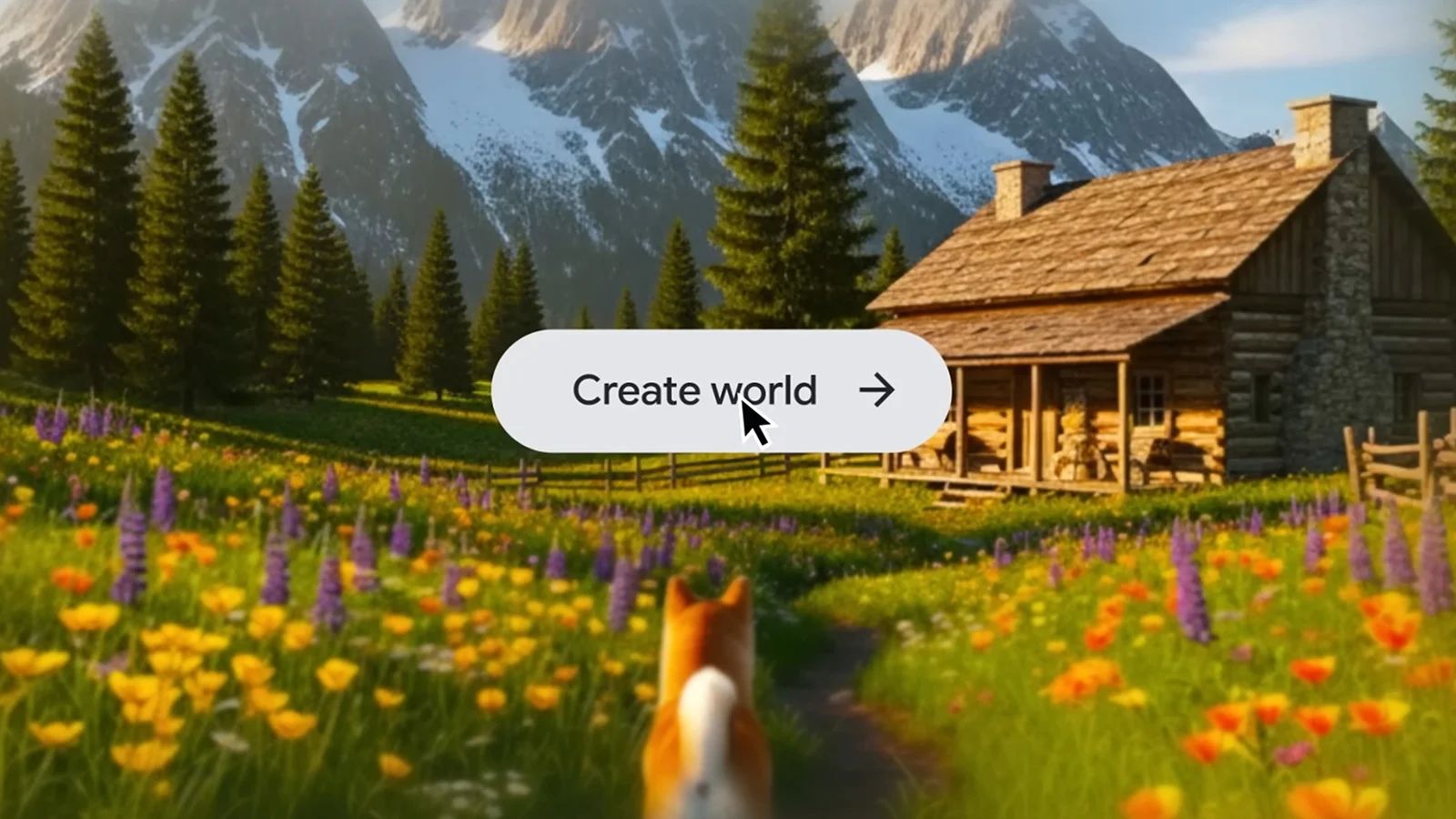

Google DeepMind, Google's AI research arm, has rolled out Project Genie, an experimental prototype that lets people generate, explore, and remix interactive, AI-generated worlds using text and image prompts. It is powered by Genie 3, the latest iteration of DeepMind's world model, and is currently available as an early research experience to Google AI Ultra subscribers in the U.S. aged 18 and over.

🚀 Why Project Genie Matters

1. From passive content to dynamic worlds

Unlike traditional AI image or video generators that output static scenes or fixed clips, Project Genie produces real-time, navigable environments that evolve as you move through them. These worlds are rendered one frame at a time, responding dynamically to your actions.
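To make "one frame at a time" concrete, here is a minimal toy sketch of an action-conditioned generation loop. It is not DeepMind's implementation: `ToyWorld`, `MOVES`, `run_session`, and the `max_frames` cap are all hypothetical names invented for illustration, and a "frame" here is just a grid position rather than an image. The only point is the shape of the loop: each new frame is computed from the frames so far plus the latest user action.

```python
from dataclasses import dataclass, field

# Hypothetical action set; the article does not describe the real control scheme.
MOVES = {"left": (-1, 0), "right": (1, 0), "up": (0, -1), "down": (0, 1), "stay": (0, 0)}


@dataclass
class ToyWorld:
    """Stand-in for a world model: a 'frame' is only an (x, y) position."""
    width: int = 5
    height: int = 3
    history: list = field(default_factory=list)  # every frame generated so far

    def first_frame(self):
        # In a real system this would come from the text/image prompt.
        frame = (self.width // 2, self.height // 2)
        self.history.append(frame)
        return frame

    def next_frame(self, action):
        # The next frame depends on the previous frame and the user's action.
        x, y = self.history[-1]
        dx, dy = MOVES[action]
        frame = (
            min(max(x + dx, 0), self.width - 1),   # clamp to world bounds
            min(max(y + dy, 0), self.height - 1),
        )
        self.history.append(frame)
        return frame


def run_session(actions, max_frames):
    """Generate frames one at a time until actions run out or the cap is hit."""
    world = ToyWorld()
    frames = [world.first_frame()]
    for action in actions:
        if len(frames) >= max_frames:  # hypothetical session-length cap
            break
        frames.append(world.next_frame(action))
    return frames


if __name__ == "__main__":
    for frame in run_session(["right", "right", "up"], max_frames=10):
        print(frame)
```

Because every frame is produced on demand from the user's latest input, the output cannot be pre-rendered the way a fixed video clip is, which is the contrast the paragraph above draws.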

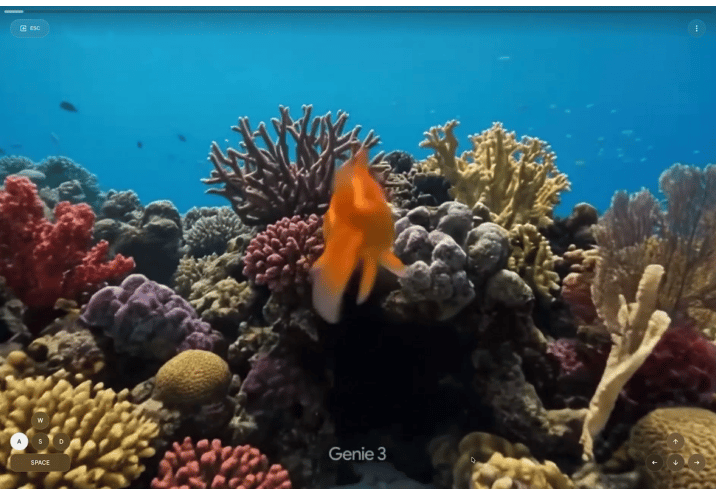

2. Built on Genie 3

At its core is Genie 3, DeepMind’s latest world model — a neural system trained to understand environments and simulate how they unfold over time. It can generate photorealistic and stylized worlds that maintain continuity as you explore.

3. Text + image prompts = worlds you control

Creators can:

Sketch out a world with words or pictures

Walk, fly, or drive through it in real time

Remix environments by altering prompts or building on others’ creations

4. Not game-ready — but a prototype with a huge vision

Project Genie has limits today: session lengths are capped (often ~60 seconds per generated world), physics and controls aren’t flawless yet, and it’s early-stage research rather than a finished product.

🧠 The Bigger Strategic Picture

Why DeepMind thinks world models matter:

World models like Genie seek to give AI a sense of how environments behave, not just how to label or describe them — a capability seen as a key step toward more general forms of AI reasoning and planning. This goes beyond static media generation into simulation and interaction territory.

🎮 Industry & Ecosystem Impact

Gaming developers watch closely:

Early market reactions show game industry stocks dipping as market participants consider how AI-generated worlds could disrupt traditional development pipelines if these tools mature further.

A creative playground (for now):

Designers, storytellers, and researchers are already experimenting with marshmallow castles, surreal landscapes, and other dreamscapes — a sign that even early versions can be compelling creative tools.

🌐 What’s Next?

Short-term:

Expanded access beyond the U.S. user trial

Increased world duration and physics fidelity

More intuitive controls

Mid-term:

Integration with AR/VR, training sims, and digital twin tools

Shared world marketplaces and community content

Long-term:

World models that simulate realistic physics, multi-agent interactions, and complex social environments — potentially a foundation for future embodied AI and robotics training.

✨ Bottom Line

Project Genie is Revolutionary

Project Genie marks a new frontier in AI content creation — shifting from static images and clips to fully interactive, generative environments. While early, it hints at a future where you can prompt entire worlds and step inside them, blurring the line between game engine, simulation, and generative AI.