Replit Changes The Game

Replit has just brought animation generation into its Agent workflow.

You can now prompt something like:

“Create a 20-second animated product walkthrough with modern typography and smooth transitions.”

Replit generates the animation as code, renders it in a preview pane, and lets you iterate conversationally. You can tweak pacing, layout, motion curves, and transitions, then export the result to MP4.

This isn’t diffusion-based video like Runway. And it’s not cinematic generation like OpenAI’s Sora.

It’s programmatic motion graphics generated inside a dev environment.

That distinction matters.

Replit CEO Amjad Masad


🌎 Why this is strategically interesting

Most AI video tools generate pixels.

Replit generates structure: the code that describes the animation.

These animations are built with front-end-style composition, which makes them modular, editable, and reproducible. Instead of regenerating a scene from scratch every time, you refine an underlying structure.

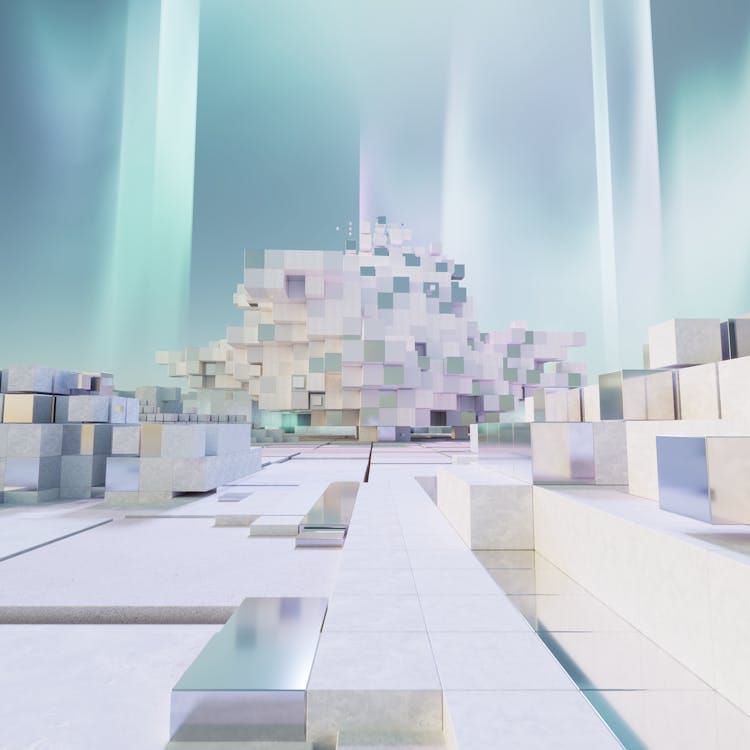

Animation Created With Human Prompt On Replit
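To make the "structure, not pixels" distinction concrete, here is a minimal TypeScript sketch. It is an illustration under stated assumptions, not Replit's actual output format: every name in it (`Scene`, `Track`, `sampleTrack`, `renderFrame`, `renderAll`) is hypothetical. The animation is plain data (keyframes plus an easing curve per property), and each frame's property values are computed deterministically from that data. So "tweaking the motion curve" means editing one field and re-rendering, not regenerating the scene.

```typescript
// Hypothetical "animation as code" model. NOT Replit's real format; names are illustrative.
type Easing = (t: number) => number;

// Easing curves map progress in [0, 1] to eased progress in [0, 1].
const linear: Easing = (t) => t;
const easeInOutCubic: Easing = (t) =>
  t < 0.5 ? 4 * t * t * t : 1 - Math.pow(-2 * t + 2, 3) / 2;

interface Keyframe {
  time: number;  // seconds from the start of the scene
  value: number; // property value at that time (e.g. opacity, x offset)
}

interface Track {
  layer: string;         // element being animated, e.g. "title"
  property: string;      // property being animated, e.g. "opacity"
  easing: Easing;
  keyframes: Keyframe[]; // assumed non-empty and sorted by time
}

interface Scene {
  durationSec: number;
  fps: number;
  tracks: Track[];
}

// Interpolate one track at time t, holding the first/last value outside the keyframe range.
function sampleTrack(track: Track, t: number): number {
  const ks = track.keyframes;
  if (ks.length === 0) throw new Error(`track ${track.layer}.${track.property} has no keyframes`);
  if (t <= ks[0].time) return ks[0].value;
  const last = ks[ks.length - 1];
  if (t >= last.time) return last.value;
  for (let i = 0; i < ks.length - 1; i++) {
    const a = ks[i];
    const b = ks[i + 1];
    if (t >= a.time && t <= b.time) {
      const span = b.time - a.time;
      const progress = span > 0 ? (t - a.time) / span : 1; // guard duplicate timestamps
      return a.value + (b.value - a.value) * track.easing(progress);
    }
  }
  return last.value; // not reached for sorted keyframes
}

// All animated property values for one frame, keyed as "layer.property".
function renderFrame(scene: Scene, frame: number): Record<string, number> {
  const t = frame / scene.fps;
  const out: Record<string, number> = {};
  for (const track of scene.tracks) {
    out[`${track.layer}.${track.property}`] = sampleTrack(track, t);
  }
  return out;
}

// Every frame of the scene; the same Scene always produces the same frames.
function renderAll(scene: Scene): Record<string, number>[] {
  const frames = Math.round(scene.durationSec * scene.fps);
  return Array.from({ length: frames }, (_, i) => renderFrame(scene, i));
}

// Usage: a 20-second walkthrough whose title fades in and slides into place.
const walkthrough: Scene = {
  durationSec: 20,
  fps: 30,
  tracks: [
    { layer: "title", property: "opacity", easing: linear,
      keyframes: [{ time: 0, value: 0 }, { time: 1, value: 1 }] },
    { layer: "title", property: "x", easing: easeInOutCubic,
      keyframes: [{ time: 0, value: -200 }, { time: 2, value: 0 }] },
  ],
};

// Frame 15 is t = 0.5 s: opacity 0.5, x -187.5 (eased).
console.log(renderFrame(walkthrough, 15));

// "Tweaking the motion curve" is a one-field edit to the structure.
const tweaked: Scene = {
  ...walkthrough,
  tracks: walkthrough.tracks.map((tr) =>
    tr.property === "x" ? { ...tr, easing: linear } : tr),
};
// Same frame with linear easing: x becomes -150.
console.log(renderFrame(tweaked, 15));
```

Because a scene always yields the same frames, edits stay reviewable and diffable like any other code. A real pipeline would still need a renderer and a video encoder to turn these values into MP4 frames; this sketch deliberately leaves that part out.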

For teams shipping product, this is a different class of tool.

Think:

Launch explainer videos

Onboarding walkthroughs

Investor demo clips

Growth experiments

Product feature highlights

Instead of bouncing between Figma → After Effects → export → developer tweaks → re-render, you stay inside one environment.

Prompt.

Adjust.

Ship.

For engineers and technical founders, that’s a cleaner loop.

What’s The HYPE?

What this signals about Replit

Replit isn’t just improving its coding assistant.

It’s expanding the surface area of what “building” means.

If you can generate backend services, front-end apps, and now motion assets — all through the same conversational interface — the IDE becomes a generalized creation layer.

Prompt To Video UI Shown In Replit Launch Video

This aligns with a broader pattern we’re seeing: AI tools aren’t replacing jobs in isolation. They’re compressing workflows.

Every time a boundary disappears between tools, the stack gets tighter.

Replit is quietly moving from “AI that helps you code” to “AI that helps you produce everything around the code.”

That’s a bigger shift than it looks on the surface.

Optimize Documents With AI!

AI Agents Are Reading Your Docs. Are You Ready?

Last month, 48% of visitors to documentation sites across Mintlify were AI agents—not humans.

Claude Code, Cursor, and other coding agents are becoming the actual customers reading your docs. And they read everything.

This changes what good documentation means. Humans skim and forgive gaps. Agents methodically check every endpoint, read every guide, and compare you against alternatives with zero fatigue.

Your docs aren't just helping users anymore—they're your product's first interview with the machines deciding whether to recommend you.

That means:

→ Clear schema markup so agents can parse your content

→ Real benchmarks, not marketing fluff

→ Open endpoints agents can actually test

→ Honest comparisons that emphasize strengths without hype

In the agentic world, documentation becomes 10x more important. Companies that make their products machine-understandable will win distribution through AI.

Bigger Than Just Animations

The bigger pattern: IDE as media engine

Zoom out for a second.

The IDE used to be where you wrote logic.

Now it’s where you generate UI, write tests, refactor architecture, draft docs, spin up infra — and increasingly, produce the assets around the product.

Animation is a signal that the boundary between “code” and “media” is dissolving.

If your AI agent can:

Write your feature

Generate the UI

Produce the demo video

Draft the launch copy

…then the IDE becomes a full production environment, not just a coding workspace.

That has second-order effects.

It reduces coordination overhead between engineering, design, and marketing. It shortens feedback loops. It favors smaller, more autonomous teams.

For early-stage startups especially, this compounds.

The fewer tool switches and human handoffs required, the faster you ship.

The Future Is Bright (Probably)

Bottom line

Replit’s animation release isn’t about competing with film-grade AI video.

It’s a signal that development environments are becoming general production environments.

The interesting question isn’t whether the animations are perfect.

It’s whether more of the surrounding work — the assets, demos, visual explainers — starts living inside the same AI-native workflow as the code itself.

That shift, if it continues, changes how teams structure work far more than any single feature release.